123b represents a novel approach to language modeling. The architecture uses a transformer-based design to generate grammatical text. Engineers from Google DeepMind created 123b as a robust tool for a variety of NLP tasks. Applications of 123b include text summarization. Fine-tuning 123b demands extensive collections

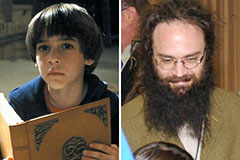